How much traffic can a pre-rendered Next.js site really handle?

I've often said things like "A pre-rendered site can easily serve hundreds of concurrent users", because, well, I've never seen one fail.

But how many can it really handle? Could my site actually handle a traffic surge from landing on something like the Hacker News frontpage? How does it compare to server-side rendering? And is it actually worth jumping through hoops to avoid SSR?

I looked around for hard data on Next.js performance, but solid numbers were surprisingly hard to find. So, I ran some tests on my own site, and the results weren't what I expected. And in perfect timing, my article on Google Translate interfering with React hit the Hacker News frontpage the very next day.

Right after I discovered (spoiler alert) my site probably couldn't handle it.

A pre-rendered site on a VPS

The first thing I wanted to find out was whether my site could handle a surge in visitors from something like hitting the frontpage of Hacker News.

To test this, I wrote a basic k6 load testing script to measure the max requests per second my server could handle. The script was as simple as possible; it repeatedly requests a single page as many times as possible, while waiting for each request to complete. You can find the full test setup in the addendum.

Running this script, a single Next.js instance serving my fully pre-rendered homepage on an X4 BladeVPS from TransIP could handle 193 requests per second, with only 63% of the requests responding within 500ms.

One key thing to underline: each request in the test is just a single call to the pre-rendered Next.js page. No assets are requested. A real visitor would need 60+ additional requests. That means as few as three simultaneous visitors could push the server dangerously close to its limit.
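
The "three visitors" figure is plain division. A minimal sketch of that arithmetic, assuming every visitor triggers all 63 requests of a full homepage load and that each request costs the server as much as the homepage request did (small assets are cheaper, so this is pessimistic). The function name is made up for illustration:

```javascript
// Rough capacity estimate: how many full page visits per second fit into a
// measured requests-per-second budget.
function visitorsPerSecond(requestsPerSecond, requestsPerVisit) {
  return requestsPerSecond / requestsPerVisit
}

// 193 RPS measured on the VPS, 63 requests for one full homepage visit.
const vpsVisitors = visitorsPerSecond(193, 63)
console.log(vpsVisitors.toFixed(1)) // prints "3.1"
```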

This was far lower than I expected.

The anatomy of a visit

To understand why this site needs 60+ requests, let's take a look at all of the requests that happen when someone visits my homepage:

- DNS lookup and SSL handshake.

- Initial request (1: 20.3 kB): The browser requests the HTML document.

- CSS (1: 1.6 kB): The browser parses the HTML and requests the essential CSS file.

At this point the page is fully styled and visible, and users can interact with most elements, even before JavaScript loads. The main things still missing are the:

- Above-the-fold images (5: 6.8 kB): The browser requests the images above the fold.

With typically only a few images above-the-fold, the total up to this point is usually between 50 kB and 100 kB. This is when the visitor starts seeing a complete page. As most interactive elements are implemented natively (with the platform), users can also already interact with the page (e.g. navigate around).

The rest of the requests are "deferred"; they're low priority and do not block rendering. This includes the majority of the requests:

- Analytics (1: 2.5 kB): The browser requests the Plausible script.

- JavaScript (13: 176.8 kB): The browser requests the JavaScript needed for the main page.

- Lazy-loaded images (1: 22.8 kB): The browser requests lazy-loaded images that are (partly) above the fold.

- Placeholder data (17: 11.9 kB): The browser requests placeholder data for images under the fold.

- Favicons (2: 52.2 kB): The browser requests favicons of various sizes.

- Preloaded resources (22: 136.1 kB): The browser preloads resources for potential navigation (to make them instant).

The total ends up being 63 requests and 434.5 kB.
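
To make the split between render-blocking and deferred requests explicit, here is a small sketch that tallies the breakdown above. The counts and sizes are copied from the list; the `deferred` flag and the helper name are my own labels for illustration:

```javascript
// Request counts and transfer sizes (kB) as listed in the breakdown above.
const breakdown = [
  { name: 'HTML document', requests: 1, kB: 20.3, deferred: false },
  { name: 'CSS', requests: 1, kB: 1.6, deferred: false },
  { name: 'Above-the-fold images', requests: 5, kB: 6.8, deferred: false },
  { name: 'Analytics', requests: 1, kB: 2.5, deferred: true },
  { name: 'JavaScript', requests: 13, kB: 176.8, deferred: true },
  { name: 'Lazy-loaded images', requests: 1, kB: 22.8, deferred: true },
  { name: 'Placeholder data', requests: 17, kB: 11.9, deferred: true },
  { name: 'Favicons', requests: 2, kB: 52.2, deferred: true },
  { name: 'Preloaded resources', requests: 22, kB: 136.1, deferred: true },
]

// Sum the request counts and sizes of a list of categories.
function totals(items) {
  return items.reduce(
    (sum, item) => ({ requests: sum.requests + item.requests, kB: sum.kB + item.kB }),
    { requests: 0, kB: 0 }
  )
}

const blocking = totals(breakdown.filter((item) => !item.deferred))
const deferred = totals(breakdown.filter((item) => item.deferred))
console.log(blocking.requests, deferred.requests) // prints "7 56"
```

Only 7 of the 63 requests stand between the visitor and a complete-looking page. The per-category sizes as listed add up to roughly 431 kB, slightly under the 434.5 kB total, presumably because of rounding or overhead that isn't itemized.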

This shows Next.js introduces a lot of extra requests and traffic compared to a more standard server-rendered site, or a non-Next.js static site. While this gives me, as a developer, full control over the user experience (UX) and an excellent developer experience (DX), it also means the user and server have to handle a lot more traffic. Then again, I don't think <500 kB is too much traffic for a modern website, especially since the page is already usable after the first 100 kB.

React Server Components (RSC) could improve this slightly by removing some of the client-side JavaScript. Unfortunately the platform isn't very mature yet and still has many issues. I'm willing to deal with this for the sake of optimal performance, but the main blocker is still its lack of CSS-in-JS support. As soon as that's fixed (i.e. PigmentCSS is released), I'll be all over it.

Scaling up

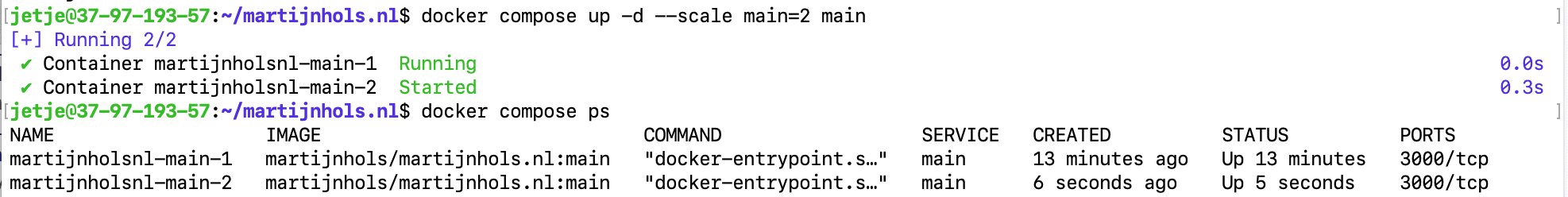

After seeing the disappointing performance of my VPS, I tried scaling up my Docker container (docker compose up -d --scale main=2 main). Instead of a single container with one Node.js process handling all requests, I now had one process per CPU core (2 cores total).

I use nginx-proxy in front of my Docker containers. It's awesome. It automatically load balances if there are multiple containers listening to the same domain.

This barely helped. The VPS could now handle 275 requests per second (↑42.49%), but still with only 76% of requests responding within 500ms. Still nowhere near enough to handle a full-blown "hug of death". And I really don't want my website to go down.

I also tested other files to get a sense for how the server would handle the additional 60+ requests. Here's what I found:

| File | RPS | Bottleneck |
|---|---|---|
| Homepage (20.3 kB) | 275 RPS | CPU |
| Blog article (13.9 kB) | 440 RPS | CPU |
| Large JS chunk (46.8 kB) | 203 RPS | CPU |
| Average JS chunk (6.1 kB) | 833 RPS | CPU |
| Small JS chunk (745 B) | 1,961 RPS | CPU |
| Image (17.7 kB) | 1,425 RPS | CPU |
| Statically generated RSS-feed (2.4 kB) | 849 RPS | CPU |

One interesting thing to note; scaling only resulted in a ↑42.49% increase in RPS on the homepage because Next.js already does basic multi-threading for GZIP compression. This means the original single container was already benefiting slightly from the second CPU core even before scaling.
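
For reference, the ↑42.49% is the relative change between the two homepage measurements (193 RPS with one container, 275 RPS with two). A minimal sketch of that calculation, with a helper name chosen for illustration:

```javascript
// Relative change between two measurements, in percent.
function percentChange(before, after) {
  return ((after - before) / before) * 100
}

// Homepage on the VPS: 193 RPS with one container, 275 RPS with two.
const gain = percentChange(193, 275)
console.log(gain.toFixed(2)) // prints "42.49"
```

Perfect scaling from one process to two would have shown up as ↑100%, which is why the gain feels small.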

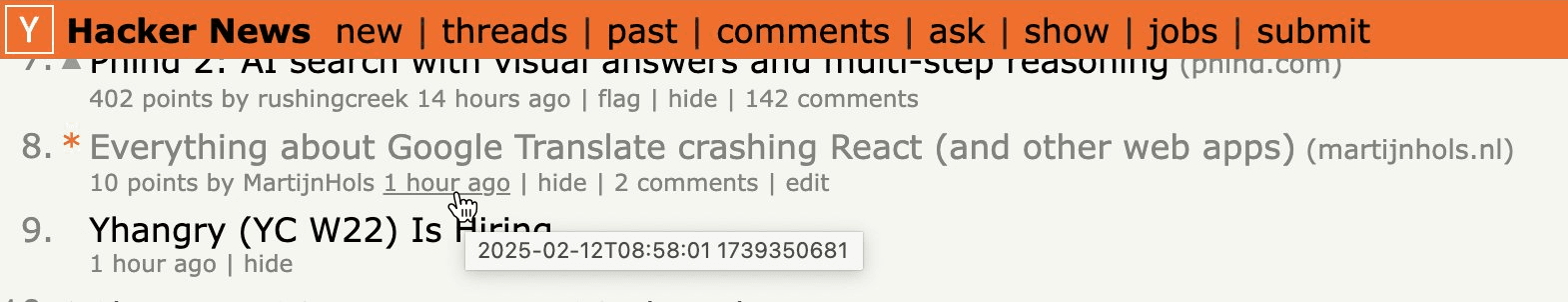

A surprise traffic spike

In perfect timing, the day after I found out my server probably couldn't handle a big hug of death, my article Everything about Google Translate crashing React (and other web apps) hit the Hacker News frontpage.

This came as a complete surprise, as I had last posted the article two days earlier. Turns out Hacker News can bump older posts straight to the frontpage for a second chance. I was thrilled my article (that I had spent a lot of time on) was getting attention, but it happening right after I realized my site might not be up to the task was extra stressful.

Luckily, the traffic wasn't as intense as I had expected from the Hacker News frontpage, and my VPS held out. But it made me wonder, how bad can a hug of death get? That's something I'll dig into in a future article, along with more stats on my own experience.

Finding a replacement

Frustrated with the performance of my VPS, I went looking for a better solution.

Cloudflare

The easy answer would be to put Cloudflare in front of my server and let it cache the hell out of the static content. I've used this setup before; back when I ran WoWAnalyzer, a single server paired with Cloudflare effortlessly handled over 550,000 unique visitors in a month.

It's a nice proposition; with Cloudflare, my server would only need to handle the initial request, while Cloudflare takes care of the other 60. Since my VPS can already handle 200 visitors per second, that would mean problem solved.

But unfortunately, Cloudflare isn't an option for me. I care about (y)our privacy and I don't want Cloudflare sitting between my visitors and my site, logging every request and potentially tracking users across the web. Plus, in the current political climate, there are other concerns as well.

I want to stick exclusively to EU-based services.

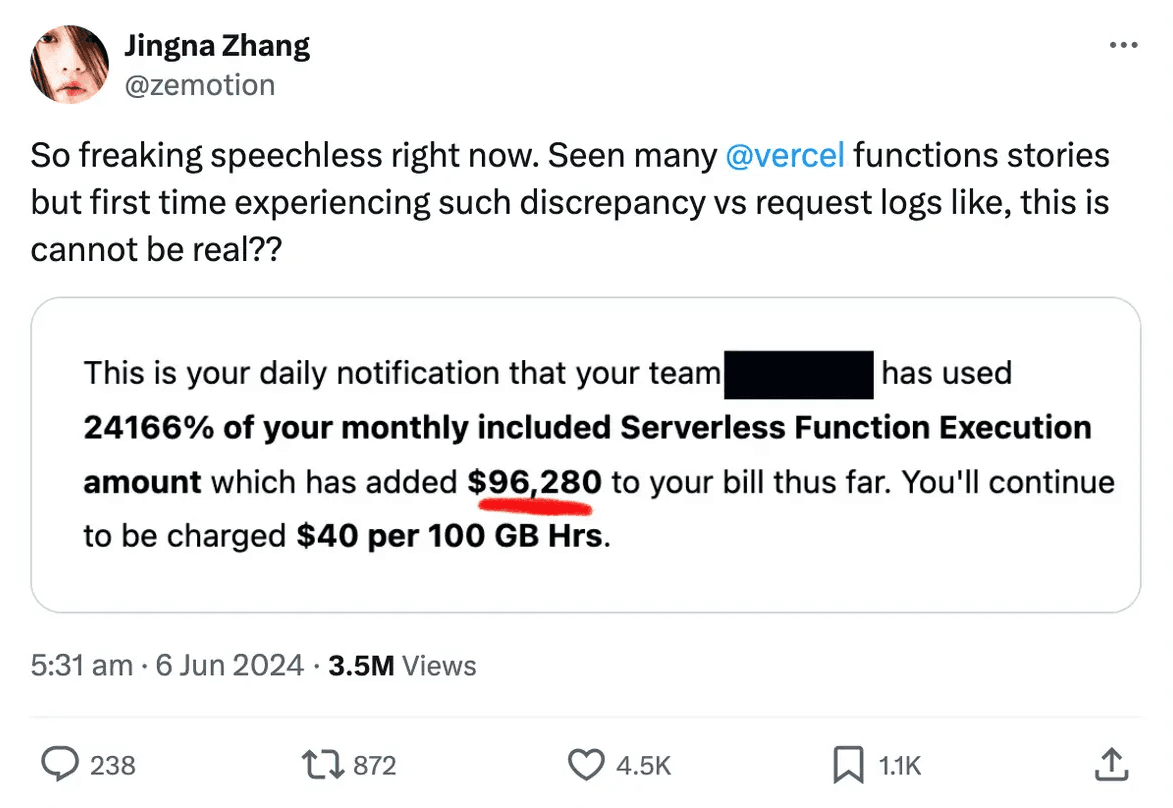

Vercel

Another obvious option would be Vercel (or an EU equivalent). But honestly, that sounds like a nightmare to me. It's not cheap, the per-site pricing means I'd either be locked in forever or have to tear things down when stopping a project, and it has unpredictable limits with extra costs on every single thing they could think of to charge extra for. This turns going viral from a technical challenge into worrying about what the total of the next bill is going to be (which could be over $100k!). My current setup has a fixed cost, and I really like that.

I do pay for Plausible, which also has usage-based pricing. But I don't mind paying for Plausible for three reasons: their pricing steps are predictable and reasonable, they don't charge for occasional traffic spikes, and it's non-essential so I can drop them at any time. I might try self-hosting in the future to save some money, but then again the last time I did that with a similar analytics product, I never got it to handle even a fraction of the traffic that was thrown at it.

Home server

A home server would be the next best thing, but it isn't really an option for me. I don't have access to fiber to provide good response times, I don't want my IP to be public, my IP is dynamic, and my internet & power aren't as reliable as I would want a server's to be. This may be a really good option for you though, especially if data integrity is not important and you're open to using Cloudflare.

VPS

There is probably a better VPS for the same or less money, but I don't want to spend time researching providers, figuring out if they're operated from the EU, and then paying just to test their servers. Besides, it's unlikely a different VPS would make a huge difference.

Dedicated server

With those constraints, I reckon a dedicated server is going to be hard to beat. I know it's kinda overkill for this site, but it's not the only thing I use my server for. I need a good home for my projects and I want the barrier for launching something new to be as low as possible. A server that I can freely mess around with would be ideal. It eliminates a barrier to my creativity.

Plus, it's a good skill to keep sharp.

Dedicated server

For years, I rented a dedicated server from So You Start, a budget brand of OVH, to host WoWAnalyzer. That server (in combination with Cloudflare) effortlessly handled over 550,000 unique visitors in a single month, processing about 75 million requests without breaking a sweat. So, I figured I'd give them another shot.

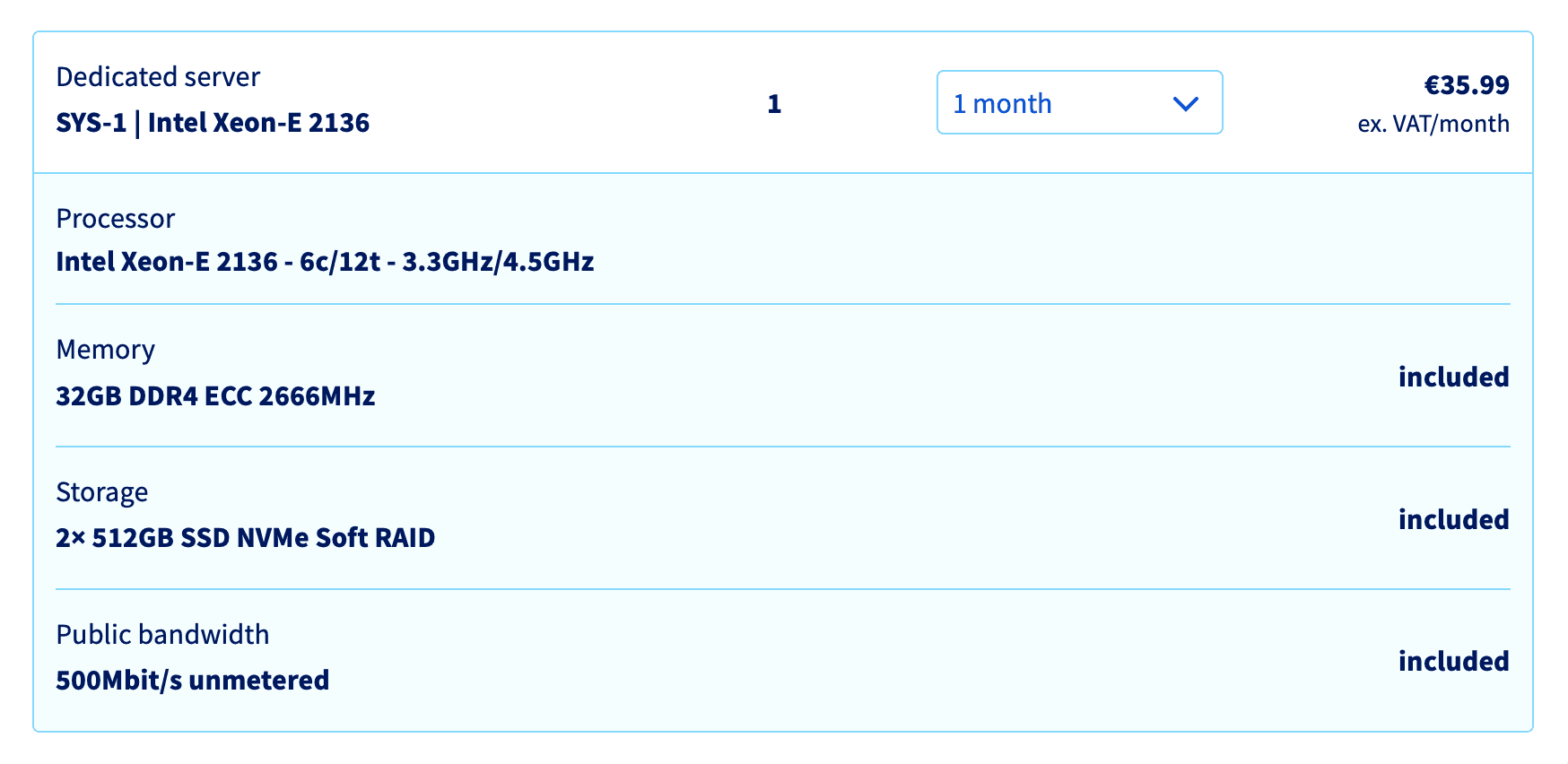

Their servers are very affordable. While they use older OVH hardware (2+ years old), their value is excellent. After a quick comparison, I went with the cheapest option; 36 euros per month for a 6 core/12 thread Intel Xeon-E 2136 - only 8 euros more than the VPS used to be.

This upgrade made a huge difference.

Running 12 instances (one per thread), the server can handle 2,330 requests per second to the homepage (with 99% responding within 500ms).

I also ran another round of tests on some other files to see how they performed:

| File | RPS | Difference vs. VPS | Bottleneck |
|---|---|---|---|
| Homepage (20.3 kB) | 2,330 RPS | ↑747.27% | CPU |
| Blog article (13.9 kB) | 3,950 RPS | ↑797.73% | CPU |
| Large JS chunk (46.8 kB) | 1,249 RPS | ↑515.27% | Network |
| Average JS chunk (6.1 kB) | 7,627 RPS | ↑815.61% | CPU |
| Small JS chunk (730 B) | 16,175 RPS | ↑724.83% | CPU |
| Image (17.7 kB) | 3,216 RPS | ↑125.68% | Network |
| Statically generated RSS-feed (2.4 kB) | 9,395 RPS | ↑1006.60% | CPU |

This time some files ran into network bottlenecks. This is because budget providers like So You Start usually come with lower bandwidth limits. In this case the max throughput of the server is 500 Mbps.

This is probably more than enough to handle anything real users can throw at it.
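
A quick back-of-the-envelope check shows why the larger files hit the network limit first. This sketch assumes the listed file sizes are the bytes that actually go over the wire, that 1 kB is 1000 bytes, and it ignores HTTP and TLS overhead, so real usage would be somewhat higher:

```javascript
// Approximate bandwidth used when serving one file at a given request rate.
// Assumes 1 kB = 1000 bytes and ignores HTTP/TLS overhead.
function throughputMbps(fileSizeKB, requestsPerSecond) {
  const bitsPerSecond = fileSizeKB * 1000 * 8 * requestsPerSecond
  return bitsPerSecond / 1e6
}

const linkMbps = 500 // stated max throughput of the dedicated server

const largeChunk = throughputMbps(46.8, 1249) // roughly 468 Mbps
const image = throughputMbps(17.7, 3216) // roughly 455 Mbps
const homepage = throughputMbps(20.3, 2330) // roughly 378 Mbps
```

The large JS chunk and the image both land within about 10% of the 500 Mbps limit, while the homepage stays well below it, which is consistent with the bottleneck column above.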

The average file size across all files is close to the size of the "Average JS chunk" (6.1 kB). Based on that, this server can support up to 7,600 requests per second. If we divide that by the 60+ requests needed to load the homepage, we're looking at being able to handle a constant stream of over 125 visitors per second.

And blog posts tend to require fewer requests than the homepage (due to there being fewer images), meaning this setup can probably handle even more.
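
Since this server has both a request-rate limit and a bandwidth limit, it's worth checking which one a stream of visitors would hit first. A rough sketch, assuming every visit is a cold homepage load of 63 requests and 434.5 kB served entirely by this server (no browser caching, 1 kB = 1000 bytes); the function and parameter names are made up for illustration:

```javascript
// Estimate sustained visitors per second from two independent limits:
// the request rate the server can sustain and the bandwidth of its uplink.
function visitorCapacity({ requestsPerSecond, requestsPerVisit, linkMbps, kBPerVisit }) {
  const byRequests = requestsPerSecond / requestsPerVisit
  // 1 kB = 1000 bytes, 8 bits per byte.
  const byBandwidth = (linkMbps * 1e6) / (kBPerVisit * 1000 * 8)
  return {
    byRequests,
    byBandwidth,
    limit: byRequests <= byBandwidth ? 'requests' : 'bandwidth',
  }
}

const dedicated = visitorCapacity({
  requestsPerSecond: 7600,
  requestsPerVisit: 63,
  linkMbps: 500,
  kBPerVisit: 434.5,
})
console.log(Math.floor(dedicated.byRequests), Math.floor(dedicated.byBandwidth), dedicated.limit)
// prints "120 143 requests"
```

With the exact count of 63 requests the estimate is about 120 visitors per second; rounding down to 60 requests gives about 126. Either way the request rate is the tighter limit, but the bandwidth ceiling isn't far behind.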

This is a setup I can rely on.

SSR performance

Now that we have a solid baseline for static site performance, let's tackle the other big question: how does server-side rendering (SSR) compare to pre-rendering?

A tricky part of benchmarking SSR is deciding what to measure. SSR pages often query databases (e.g. increment view count), call APIs (e.g. Algolia Search), or handle pagination. If we include that, the test would mostly reflect the overhead of that integration rather than SSR itself.

To give SSR the best possible shot, I'll focus purely on what happens when rendering a React page with some synchronous logic on the server - with no additional API or database interactions.

Each test case is a little different. The homepage simply renders the React component tree on the server. The blog article does a tiny bit of logic (calculating the related articles) before rendering. The RSS feed on the other hand, is dynamically generated using the rss library. Since SSR might affect each of these differently, I expect some variation in the results, making for an interesting comparison.
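
To illustrate the difference being measured, here is a sketch of how the blog article case could look in the Next.js Pages Router. This is not the actual code of this site: the article data, the `relatedArticles` helper, and the tag-matching logic are hypothetical. Only `getStaticProps` and `getServerSideProps` are real Next.js data-fetching functions, and in a real page file the one in use would be exported (a page can only use one of the two, and a dynamic route using `getStaticProps` also needs `getStaticPaths`).

```javascript
// Hypothetical article data; a real site would load this from files or a CMS.
const articles = [
  { slug: 'post-a', tags: ['react'] },
  { slug: 'post-b', tags: ['accessibility'] },
  { slug: 'post-c', tags: ['react'] },
]

// Hypothetical synchronous logic: other articles sharing at least one tag.
function relatedArticles(slug) {
  const current = articles.find((article) => article.slug === slug)
  if (!current) return []
  return articles.filter(
    (article) =>
      article.slug !== slug && article.tags.some((tag) => current.tags.includes(tag))
  )
}

// Pre-rendered variant: in a production build Next.js calls this at build
// time, so the logic runs once and the resulting HTML is served as a file.
async function getStaticProps({ params }) {
  return { props: { related: relatedArticles(params.slug) } }
}

// SSR variant: Next.js calls this on every request, so the same logic and
// the React render that follows both run for each visitor.
async function getServerSideProps({ params }) {
  return { props: { related: relatedArticles(params.slug) } }
}
```

The two functions return the same props; the only difference is when and how often the work happens, which is exactly what the benchmark isolates.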

Without further ado, the results:

| File | RPS | Difference vs. pre-rendered | Bottleneck |
|---|---|---|---|
| Homepage (20.3 kB) | 271 RPS | ↓88.37% | CPU |
| Blog article (13.9 kB) | 884 RPS | ↓77.62% | CPU |
| Statically generated RSS-feed (2.3 kB) | 4,521 RPS | ↓51.88% | CPU |

I needed a few takes to process this one.

At first glance, 271 RPS on the homepage doesn't seem that bad. Especially considering static assets (CSS, JS, images) are still static and their RPS will be largely unchanged.

But it's a different story when you consider that this is all that a dedicated server running nothing else can handle. It maxes out at almost 90% fewer requests per second than static generation, and if that wasn't bad enough, only 74% of the requests to the homepage responded within 500ms (the median response time was 1.51 seconds, while 1 in 10 took over 3 seconds).

SSR is very slow to boot.
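
Figures like "share within 500ms", "median" and "1 in 10 took over" are all derived from the raw per-request durations. A minimal sketch of how such numbers can be computed, using the nearest-rank percentile method. The sample durations are made up and are not measurements from these tests; k6 reports its own summary statistics, which may use a different interpolation:

```javascript
// Nearest-rank percentile of a list of durations (in milliseconds).
function percentile(durations, p) {
  const sorted = [...durations].sort((a, b) => a - b)
  const rank = Math.max(1, Math.ceil((p * sorted.length) / 100))
  return sorted[rank - 1]
}

// Share of requests (0-100) that finished faster than the given limit.
function shareBelow(durations, limitMs) {
  const fast = durations.filter((duration) => duration < limitMs).length
  return (fast / durations.length) * 100
}

// Made-up sample, not data from the tests in this article.
const sample = [120, 180, 250, 310, 420, 480, 650, 900, 1500, 3200]

const within500 = shareBelow(sample, 500) // 60 (% of requests under 500ms)
const median = percentile(sample, 50) // 420 (ms)
const p90 = percentile(sample, 90) // 1500 (ms): 1 in 10 took longer than this
```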

For good measure, I ran the same test on the VPS to see how it would hold up:

| File | RPS | Difference vs. pre-rendered | Bottleneck |
|---|---|---|---|
| Homepage (20.3 kB) | 34 RPS | ↓87.64% | CPU |
| Blog article (13.9 kB) | 95 RPS | ↓78.41% | CPU |
| Statically generated RSS-feed (2.3 kB) | 490 RPS | ↓42.29% | CPU |

Oof.

The numbers make it clear; using SSR on a page that you're hoping to get a lot of visitors on is just asking for trouble.

Final thoughts

This whole journey started with a simple question: can my site handle a big traffic surge? I always assumed pre-rendering was enough for anything, but I'm glad I put that assumption to the test. Turns out, my original VPS wasn't nearly as resilient as I thought. Just three visitors per second could have pushed it over its limits. That wasn't what I expected.

A dedicated server really is a massive upgrade over a VPS. And while it doesn’t have to cost much more, bandwidth limits can be a hidden bottleneck. No point spending extra on better CPU if your server's network can't keep up.

At this point, I think my setup can probably handle more than I'll ever really need.

However, if I'm honest, that's still just speculation. I don't actually know for a fact how big a hug of death can get. But that's something for another time; I'll cover that, and stats of my own experience landing on the Hacker News frontpage, in a future article.

As for SSR, we now have hard numbers backing up the claim that pre-rendering scales far better.

I'm still curious how much further the server can be pushed - without Next.js. In another upcoming article, I'll test whether replacing the Next.js server with Nginx and optimizing compression makes a big difference. This should show us whether the Next.js server is efficient in the first place.

Want to see those articles when they drop? Add my RSS feed to your reader or follow me on Twitter, BlueSky or LinkedIn.

Addendum

I originally planned to include a deep dive into everything I used and all the findings from my tests, but since this article has already taken forever to write (I had to split it into three parts), I cut this short.

The k6 script:

    import http from 'k6/http'
    import { check } from 'k6'

    const url = 'https://example.com'
    // VUs are set to the amount needed to achieve 99% on the <500ms response time
    // with a minimum of 200 to simulate a realistic peak amount of users.
    // More VUs does not always mean more RPS, but it can lead to slower responses.
    const vus = 200

    export const options = {
      // Skip decompression to greatly reduce CPU usage. This prevents throttling on
      // my i9 MBP, making tests more reliable.
      discardResponseBodies: true,
      scenarios: {
        rps: {
          executor: 'ramping-vus',
          stages: [
            // A quick ramp up to max VUs to simulate a sudden spike in traffic.
            // "constant-vus" leads to connection errors (DDOS protection?), so this
            // ramp up is a bit more robust and realistic.
            { duration: '5s', target: vus },
            { duration: '1m', target: vus },
          ],
          // Interrupt tests running longer than 1sec at the end of the test so that
          // they don't skew the results.
          gracefulStop: '1s',
        },
      },
    }

    export default function () {
      const res = http.get(url, {
        headers: {
          'Accept-Encoding': 'br, gzip',
          accept: 'image/webp',
        },
      })

      check(res, {
        'status is 200': (r) => r.status === 200,
        'response time is below 500ms': (r) => r.timings.duration < 500,
      })
    }

Testing Environment & Findings

- Tests were run on a top-specced 2019 Intel MacBook Pro, connected via Ethernet (USB-C adapter), with ~760 Mbps download, exceeding the bandwidth of the dedicated server.

- Network adapters can bottleneck performance during benchmarking. I made sure mine wasn't a limiting factor.

- I let temperatures drop between tests to minimize any impact from throttling (pretty sure it was insignificant).

- I ended up running most tests at least three times (probably more) due to testing issues or changes to the script. The results were pretty consistent.

- I focused on <500ms response times because that's about the patience I can muster when I open a new site for the first time.

- OVH claims "burst available to absorb the occasional peak traffic", but I saw no evidence of it existing.

- I did compression and Nginx tests too with some interesting findings (it's not as significant as you may expect), but they didn't make the cut for this article. I'll share that later.

- I did dive into the possible hug of death traffic spike from Hacker News and Reddit, but had to cut it to focus this article and not make it too long. I'll share that later too (probably after this one but no promises).

Files tested

I tested on the following files and URLs. The hashes may not exist anymore, but you can find similar files on this page.

- Homepage: https://next.martijnhols.nl/

- Blog article: The European Accessibility Act for websites and apps: https://next.martijnhols.nl/blog/the-european-accessibility-act-for-websites-and-apps

- Large JS chunk: https://next.martijnhols.nl/_next/static/chunks/main-3e0600cd4aa10073.js

- Average JS chunk: https://next.martijnhols.nl/_next/static/chunks/102-38160eae18c28218.js

- Small JS chunk: https://next.martijnhols.nl/_next/static/tKviHia3kWxxoRr-Rb42G/_ssgManifest.js

- Image: https://next.martijnhols.nl/_next/image?url=%2F_next%2Fstatic%2Fmedia%2Faccessibility-toolkit.80a1e3d7.png&w=384&q=75

- Statically generated RSS-feed: https://next.martijnhols.nl/rss.xml

Please don't hit these URLs with your load tests.

next in this case stands for next (major) version, not Next.js. This entire site runs on Next.js.